Serverless has been one of my favorite topics in my cloud journey. It is a concept that makes a developer’s life simpler: no provisioning of resources, no autoscaling groups to configure. You just write your logic and, magically, everything else happens in the background!

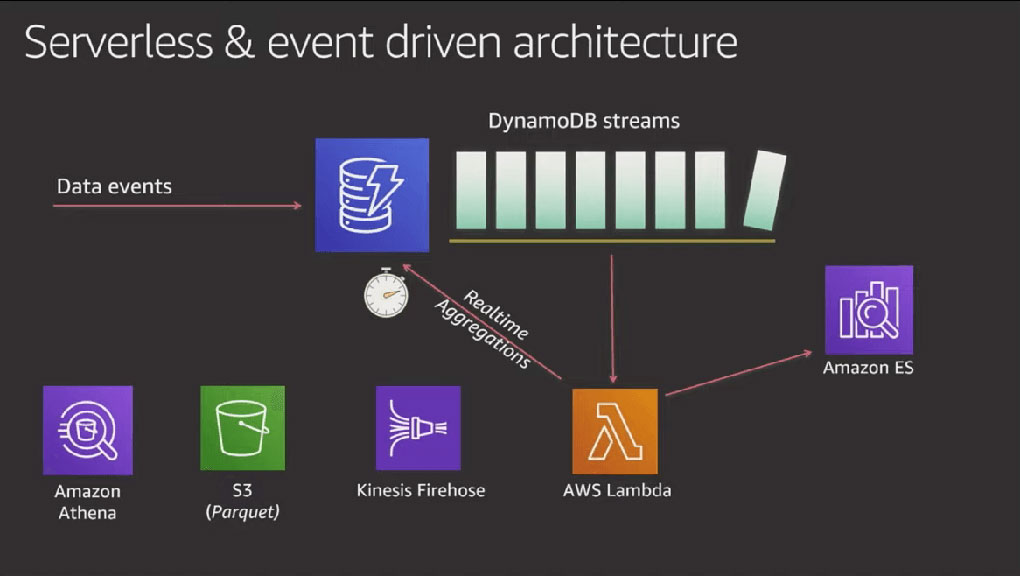

But wait, there is more to it! Serverless is sometimes thought of as suitable only for stateless or small executions, but that is not the case. You can design a large-scale serverless architecture and manage state through event-driven executions.

What exactly is serverless?

Serverless doesn’t mean that there are no servers; it means that you, as a developer, don’t have to worry about provisioning servers, monitoring them, or managing anything else outside your expertise. Function as a Service (FaaS) is a common form of serverless: you take care of just your code and the cloud provider manages the rest. AWS offers various serverless services that we can use:

- API Gateway

- S3

- DynamoDB

- Lambda

- Step Functions, etc.

Why do DynamoDB Streams exist, and how do they enable event-driven architecture?

Many applications can benefit from capturing changes to items in a DynamoDB table at the moment those changes occur. Some examples:

- An application in one AWS Region modifies the data in a DynamoDB table. The second application in another Region reads these data modifications and writes the data to another table, creating a replica that stays in sync with the original table.

- A popular mobile app modifies data in a DynamoDB table, at the rate of thousands of updates per second. Another application captures and stores data about these updates, providing near-real-time usage metrics for the mobile app.

- A global multi-player game has a multi-master topology, storing data in multiple AWS Regions. Each master stays in sync by consuming and replaying the changes that occur in the remote Regions.

- An application automatically sends notifications to the mobile devices of all friends in a group as soon as one friend uploads a new picture.

DynamoDB Streams enables solutions such as these, and many others. DynamoDB Streams captures a time-ordered sequence of item-level modifications in any DynamoDB table and stores this information in a log for up to 24 hours. Applications can access this log and view the data items as they appeared before and after they were modified, in near-real-time.

A DynamoDB stream is an ordered flow of information about changes to items in a DynamoDB table. When you enable a stream on a table, DynamoDB captures information about every modification to the data items in that table.

This is how streams enable event-driven architecture: every change (create, update, delete) to an item in the table is captured as an event that we can act on.
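To make this concrete, here is a minimal Python sketch of reacting to each kind of change. DynamoDB Streams tags every record with an `eventName` of `INSERT`, `MODIFY`, or `REMOVE`; the handler functions and the `dispatch` helper are hypothetical names, and the sample records are trimmed to only the fields the code reads.

```python
from typing import Any, Callable, Dict, List

Record = Dict[str, Any]


def _user_id(record: Record) -> str:
    # Assumes user_id (the Users table's partition key) is a string attribute.
    return record["dynamodb"]["Keys"]["user_id"]["S"]


# Hypothetical handlers: replace the bodies with your own business logic.
def handle_insert(record: Record) -> str:
    return f"created {_user_id(record)}"


def handle_modify(record: Record) -> str:
    return f"updated {_user_id(record)}"


def handle_remove(record: Record) -> str:
    return f"deleted {_user_id(record)}"


# DynamoDB Streams labels every record with one of these three event names.
HANDLERS: Dict[str, Callable[[Record], str]] = {
    "INSERT": handle_insert,
    "MODIFY": handle_modify,
    "REMOVE": handle_remove,
}


def dispatch(records: List[Record]) -> List[str]:
    """Route each stream record to the handler registered for its change type."""
    return [HANDLERS[record["eventName"]](record) for record in records]


if __name__ == "__main__":
    sample = [
        {"eventName": "INSERT", "dynamodb": {"Keys": {"user_id": {"S": "u1"}}}},
        {"eventName": "REMOVE", "dynamodb": {"Keys": {"user_id": {"S": "u2"}}}},
    ]
    print(dispatch(sample))  # ['created u1', 'deleted u2']
```

A lookup table keeps each change type's logic in its own function, so adding behavior for deletes, for example, does not touch the insert path.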

Let’s dive into the implementation!

In this article, I explain how you can use DynamoDB (a serverless NoSQL database) and its streams to build an event-driven architecture.

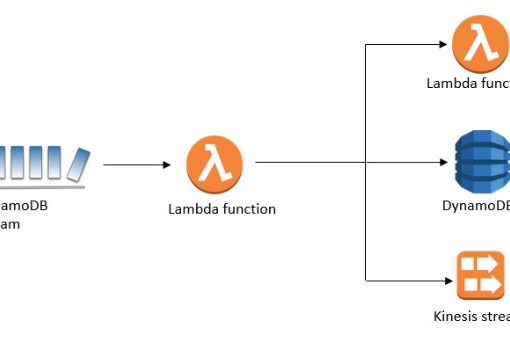

Combining DynamoDB Streams with AWS Lambda makes it possible to respond to data changes automatically.


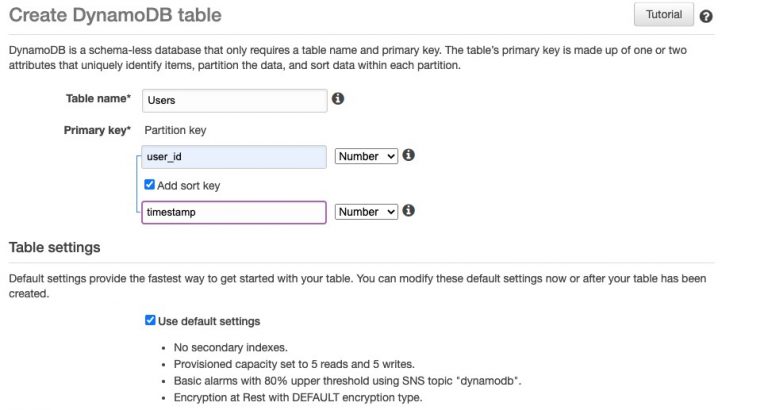

Step 1: Create a table. Here I use a Users table with user_id as the partition key and timestamp as the sort key.

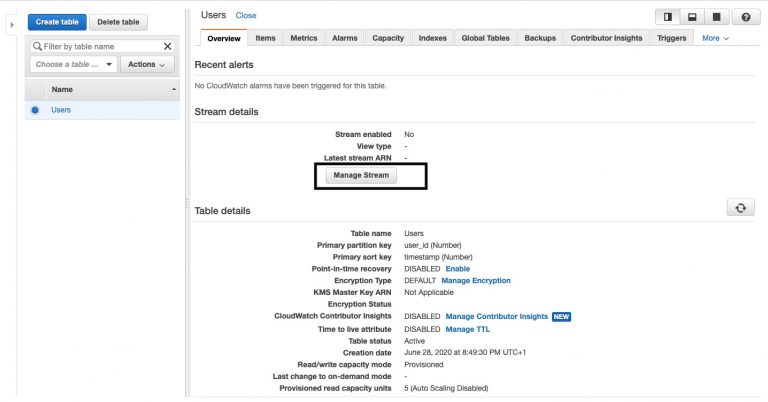

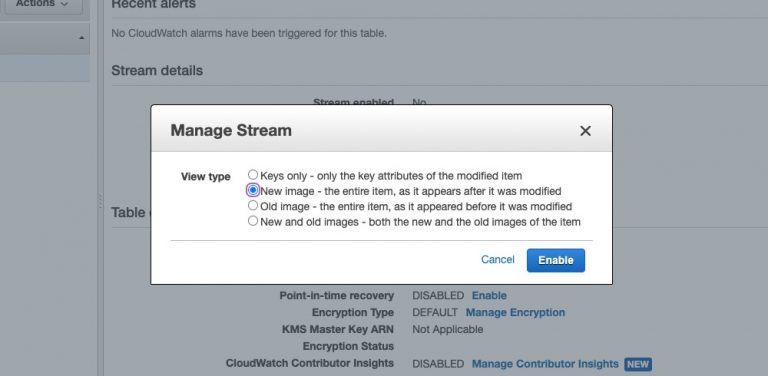
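If you prefer scripting this step over clicking through the console, the sketch below builds the arguments for DynamoDB's CreateTable call. It assumes user_id is a string (`S`), timestamp is a number (`N`, for example epoch seconds), and on-demand billing; your console choices may differ. `users_table_params` is a hypothetical helper name.

```python
from typing import Any, Dict


def users_table_params(table_name: str = "Users") -> Dict[str, Any]:
    """Build CreateTable keyword arguments for the Users table.

    Assumptions: user_id is a string (S), timestamp is a number (N),
    and the table uses on-demand (PAY_PER_REQUEST) billing.
    """
    return {
        "TableName": table_name,
        "KeySchema": [
            {"AttributeName": "user_id", "KeyType": "HASH"},     # partition key
            {"AttributeName": "timestamp", "KeyType": "RANGE"},  # sort key
        ],
        "AttributeDefinitions": [
            {"AttributeName": "user_id", "AttributeType": "S"},
            {"AttributeName": "timestamp", "AttributeType": "N"},
        ],
        # On-demand billing means no ProvisionedThroughput block is needed.
        "BillingMode": "PAY_PER_REQUEST",
    }


if __name__ == "__main__":
    params = users_table_params()
    print(params["KeySchema"])
    # With boto3 installed and AWS credentials configured:
    #   import boto3
    #   boto3.client("dynamodb").create_table(**params)
```

Only key attributes go in `AttributeDefinitions`; other attributes such as a user's name are schemaless and are simply written with each item.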

Step 2: Enable a stream on the table. Depending on the use case, a stream can record only the keys, the item as it looks after the change (new image), before the change (old image), or both; here we capture just the updated data.

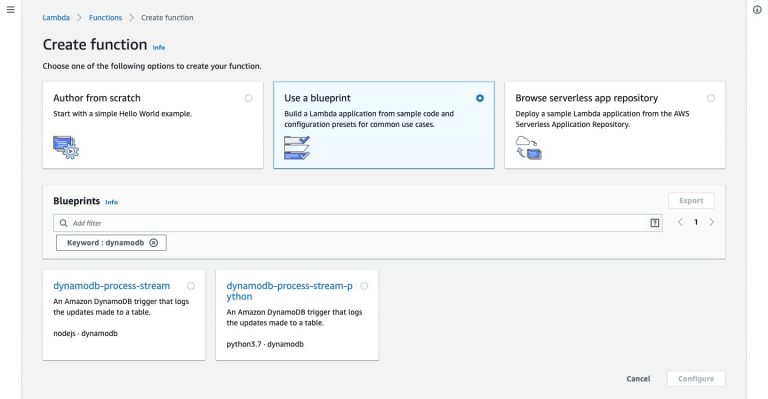

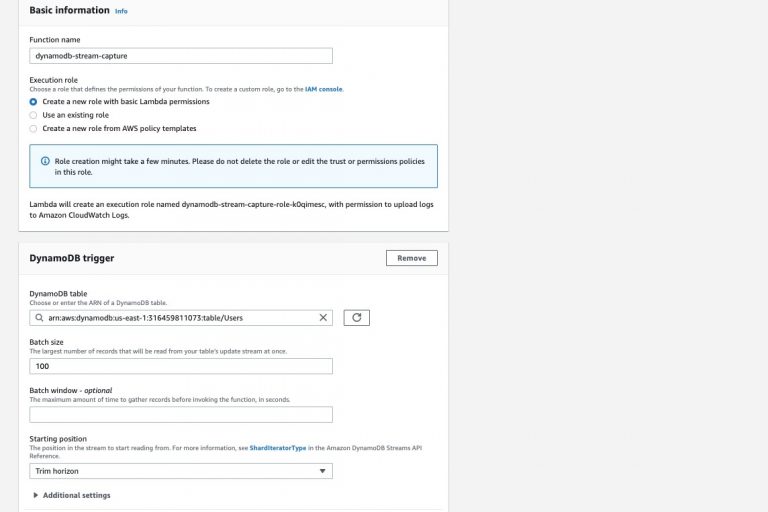
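The same setting can be applied with DynamoDB's UpdateTable call. This sketch assumes the `NEW_IMAGE` view type matches "just the updated data"; the four view types in the set are the ones DynamoDB Streams supports, and `enable_stream_params` is a hypothetical helper name.

```python
from typing import Any, Dict

# The view types DynamoDB Streams supports.
STREAM_VIEW_TYPES = {"KEYS_ONLY", "NEW_IMAGE", "OLD_IMAGE", "NEW_AND_OLD_IMAGES"}


def enable_stream_params(table_name: str, view_type: str = "NEW_IMAGE") -> Dict[str, Any]:
    """Build UpdateTable keyword arguments that turn on a stream for a table."""
    if view_type not in STREAM_VIEW_TYPES:
        raise ValueError(f"unsupported StreamViewType: {view_type}")
    return {
        "TableName": table_name,
        "StreamSpecification": {"StreamEnabled": True, "StreamViewType": view_type},
    }


if __name__ == "__main__":
    print(enable_stream_params("Users"))
    # With boto3:
    #   boto3.client("dynamodb").update_table(**enable_stream_params("Users"))
```

CreateTable accepts the same `StreamSpecification`, so the stream can also be enabled when the table is created. Choose `NEW_AND_OLD_IMAGES` if your function needs to compare an item before and after a change.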

Step 3: Create a Lambda function. For this article I create the function from a blueprint, as it is easy to demonstrate and gives you an idea of how to go about it. I name my function dynamodb-stream-capture.

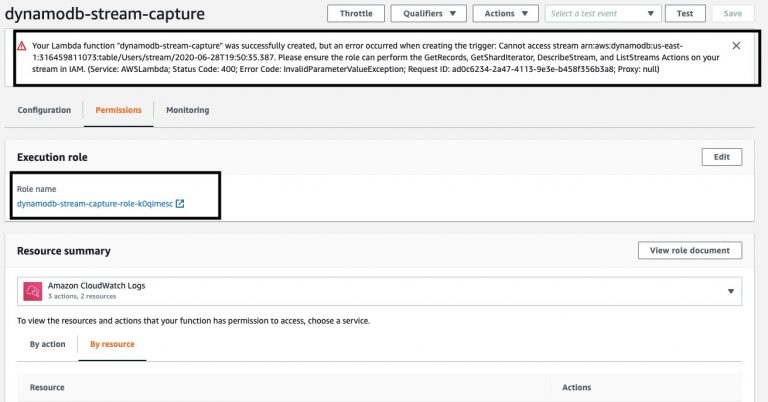

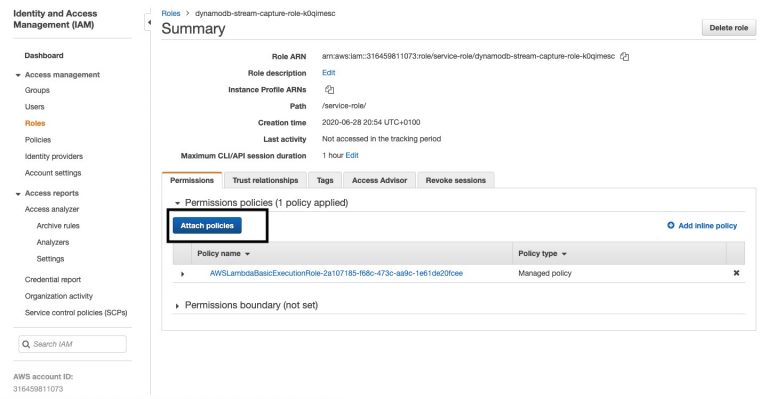

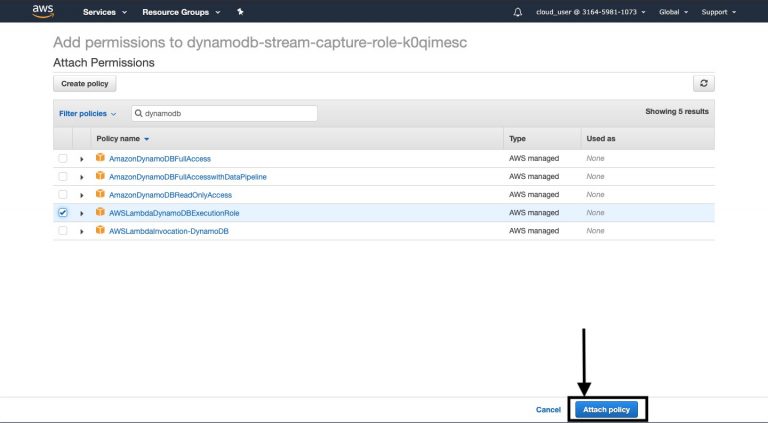
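The blueprint generates a handler that logs the records it receives. A minimal Python handler doing something similar might look like this; the blueprint's exact code may differ by runtime and version, `lambda_handler` is simply the conventional Python entry-point name, and the sample event is hand-written rather than captured output.

```python
import json
from typing import Any, Dict


def lambda_handler(event: Dict[str, Any], context: Any) -> str:
    """Log every DynamoDB Streams record that Lambda delivers in one batch."""
    records = event["Records"]
    for record in records:
        print(record["eventID"])
        print(record["eventName"])  # INSERT, MODIFY or REMOVE
        print("DynamoDB Record: " + json.dumps(record["dynamodb"], indent=2))
    return f"Successfully processed {len(records)} records."


if __name__ == "__main__":
    # Hand-written sample event, trimmed to the fields the handler reads.
    sample_event = {
        "Records": [
            {
                "eventID": "1",
                "eventName": "INSERT",
                "dynamodb": {
                    "Keys": {"user_id": {"S": "u1"}, "timestamp": {"N": "1700000000"}},
                    "NewImage": {
                        "user_id": {"S": "u1"},
                        "timestamp": {"N": "1700000000"},
                        "name": {"S": "Alice"},
                    },
                },
            }
        ]
    }
    print(lambda_handler(sample_event, None))
```

Anything written with `print` ends up in the function's CloudWatch log group, which is where we check the results in Step 7.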

Step 4: Resolve the DynamoDB IAM permissions error. Click the execution role to open it in IAM, and follow along with me to grant the role permission to read from the DynamoDB stream.

If you refresh the Lambda function page now, the error should be resolved.

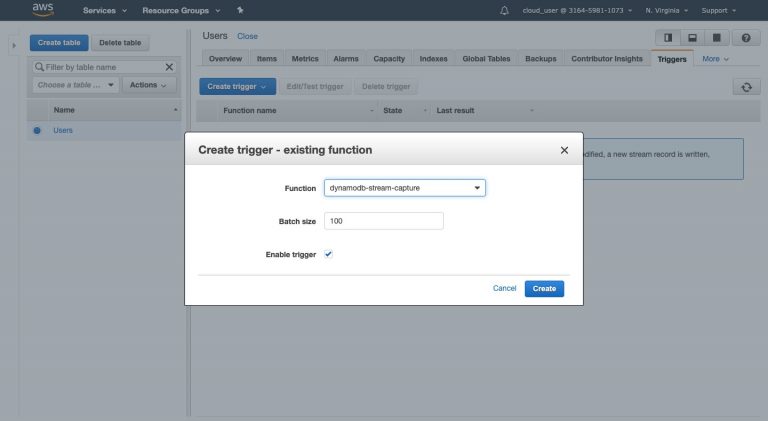
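For reference, the permissions a stream-reading Lambda role typically needs are the DynamoDB stream read actions plus CloudWatch Logs writes. The sketch below builds such a policy document as a Python dict; the stream ARN (region, account ID `123456789012`) is a hypothetical placeholder, and attaching the AWS managed policy `AWSLambdaDynamoDBExecutionRole` is a simpler alternative that grants the same actions on all resources.

```python
import json
from typing import Any, Dict

# Hypothetical ARN: substitute your own region, account ID and table name.
EXAMPLE_STREAM_ARN = "arn:aws:dynamodb:us-east-1:123456789012:table/Users/stream/*"


def stream_read_policy(stream_arn: str) -> Dict[str, Any]:
    """IAM policy letting a Lambda execution role read a DynamoDB stream and log."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {   # Reading records from the specific stream.
                "Effect": "Allow",
                "Action": [
                    "dynamodb:DescribeStream",
                    "dynamodb:GetRecords",
                    "dynamodb:GetShardIterator",
                ],
                "Resource": stream_arn,
            },
            {   # Listing streams is not scoped to a single stream.
                "Effect": "Allow",
                "Action": ["dynamodb:ListStreams"],
                "Resource": "*",
            },
            {   # Writing the function's output to CloudWatch Logs.
                "Effect": "Allow",
                "Action": [
                    "logs:CreateLogGroup",
                    "logs:CreateLogStream",
                    "logs:PutLogEvents",
                ],
                "Resource": "*",
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(stream_read_policy(EXAMPLE_STREAM_ARN), indent=2))
```

Scoping the read actions to one stream ARN follows least privilege: the function can consume the Users stream and nothing else.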

Step 5: By now, a trigger for the DynamoDB table should have been created on the Lambda function.

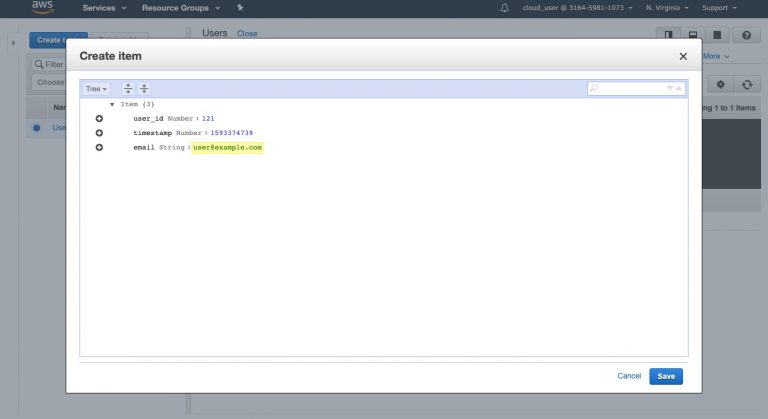
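Under the hood, this trigger is a Lambda event source mapping that connects the stream to the function. A sketch of the arguments for Lambda's CreateEventSourceMapping call follows; the stream ARN is whatever your table reports, the batch size of 100 is Lambda's default for DynamoDB sources, and `trigger_params` is a hypothetical helper name.

```python
from typing import Any, Dict

# For DynamoDB streams, reading can start at the oldest record or the newest.
VALID_STARTING_POSITIONS = {"TRIM_HORIZON", "LATEST"}


def trigger_params(
    stream_arn: str,
    function_name: str = "dynamodb-stream-capture",
    batch_size: int = 100,
    starting_position: str = "LATEST",
) -> Dict[str, Any]:
    """Build CreateEventSourceMapping keyword arguments for a stream trigger."""
    if starting_position not in VALID_STARTING_POSITIONS:
        raise ValueError(f"unsupported StartingPosition: {starting_position}")
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    return {
        "EventSourceArn": stream_arn,
        "FunctionName": function_name,
        "StartingPosition": starting_position,  # LATEST: only changes made from now on
        "BatchSize": batch_size,  # max records handed to one invocation
        "Enabled": True,
    }


if __name__ == "__main__":
    print(trigger_params("<your-stream-arn>"))
    # With boto3:
    #   boto3.client("lambda").create_event_source_mapping(**trigger_params(arn))
```

`TRIM_HORIZON` would instead replay everything still retained in the stream (up to 24 hours), which is useful when the function must not miss earlier changes.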

Step 6: Now insert, update, or delete data in your DynamoDB table.

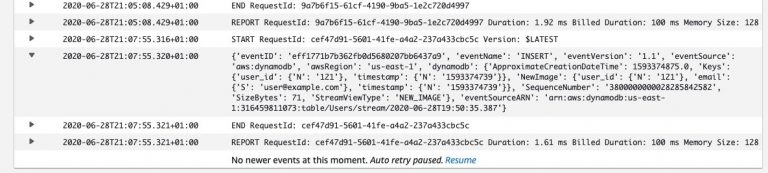
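The same changes can be made from code. These helpers build arguments for PutItem, UpdateItem and DeleteItem against the Users table, assuming the key types from Step 1 (string user_id, numeric timestamp) and a hypothetical `name` attribute; the helper names are also illustrative.

```python
from typing import Any, Dict

TABLE = "Users"


def _key(user_id: str, ts: int) -> Dict[str, Any]:
    # DynamoDB JSON sends numbers as strings inside an "N" attribute.
    return {"user_id": {"S": user_id}, "timestamp": {"N": str(ts)}}


def put_params(user_id: str, ts: int, name: str) -> Dict[str, Any]:
    """PutItem arguments; writing a new key produces an INSERT stream record."""
    return {"TableName": TABLE, "Item": {**_key(user_id, ts), "name": {"S": name}}}


def rename_params(user_id: str, ts: int, new_name: str) -> Dict[str, Any]:
    """UpdateItem arguments; changing an existing item produces a MODIFY record."""
    return {
        "TableName": TABLE,
        "Key": _key(user_id, ts),
        "UpdateExpression": "SET #n = :n",
        "ExpressionAttributeNames": {"#n": "name"},  # "name" is a reserved word
        "ExpressionAttributeValues": {":n": {"S": new_name}},
    }


def delete_params(user_id: str, ts: int) -> Dict[str, Any]:
    """DeleteItem arguments; removing an existing item produces a REMOVE record."""
    return {"TableName": TABLE, "Key": _key(user_id, ts)}
```

With a boto3 DynamoDB client, each dict is passed straight through, e.g. `client.put_item(**put_params("u1", 1700000000, "Alice"))`.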

Step 7: These changes will now trigger your Lambda function. To verify this, let’s visit the CloudWatch log group created for our Lambda function.

As you can see, the log entries in CloudWatch show the details of the changed data in our table.
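The logged images are in DynamoDB's typed JSON format (`{"S": ...}`, `{"N": ...}` and so on). A small converter like the one below, a sketch covering only the common types, turns an image into plain Python values; boto3 ships a full equivalent as `boto3.dynamodb.types.TypeDeserializer`. The function names here are hypothetical.

```python
from decimal import Decimal
from typing import Any, Dict


def from_attribute_value(av: Dict[str, Any]) -> Any:
    """Convert one DynamoDB-JSON attribute value into a plain Python value."""
    type_tag, value = next(iter(av.items()))  # each value has exactly one type tag
    if type_tag in ("S", "BOOL"):
        return value
    if type_tag == "N":
        return Decimal(value)  # keeps DynamoDB's exact numeric precision
    if type_tag == "NULL":
        return None
    if type_tag == "M":
        return {k: from_attribute_value(v) for k, v in value.items()}
    if type_tag == "L":
        return [from_attribute_value(v) for v in value]
    if type_tag == "SS":
        return set(value)
    if type_tag == "NS":
        return {Decimal(v) for v in value}
    # Binary types (B, BS) are left out of this sketch.
    raise ValueError(f"unsupported attribute type: {type_tag}")


def image_to_dict(image: Dict[str, Any]) -> Dict[str, Any]:
    """Convert a stream record's NewImage/OldImage into a plain dict."""
    return {name: from_attribute_value(av) for name, av in image.items()}
```

Inside a handler, `image_to_dict(record["dynamodb"]["NewImage"])` gives a readable item; note that `NewImage` is only present when the stream's view type includes new images and the event is an insert or update.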

One use case for this concept is sending notifications or alerts to users when their data changes. Because this architecture is highly flexible, there is a lot more you can build with it, so let your imagination run. Feel free to point out my mistakes, share suggestions, or tell me what you implemented using this architecture.
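As a sketch of that notification idea, the code below turns `MODIFY` records into alert messages. Listing exactly which fields changed only works if the stream's view type is `NEW_AND_OLD_IMAGES`; otherwise it falls back to a generic message. The message format and function names are hypothetical, and actually delivering the alert (for example through Amazon SNS) is left out.

```python
from typing import Any, Dict, List, Optional

Record = Dict[str, Any]


def changed_attributes(old: Dict[str, Any], new: Dict[str, Any]) -> List[str]:
    """Names of attributes whose DynamoDB-JSON value differs between two images."""
    return sorted(name for name in set(old) | set(new) if old.get(name) != new.get(name))


def notification_for(record: Record) -> Optional[str]:
    """Return an alert message for an updated item, or None for other events."""
    if record.get("eventName") != "MODIFY":
        return None
    data = record["dynamodb"]
    user_id = data["Keys"]["user_id"]["S"]  # assumes a string partition key
    if "OldImage" in data and "NewImage" in data:
        fields = ", ".join(changed_attributes(data["OldImage"], data["NewImage"]))
        return f"Hi {user_id}, these fields were updated: {fields}"
    return f"Hi {user_id}, your data was updated."


def notifications(records: List[Record]) -> List[str]:
    """Collect one message per MODIFY record in a Lambda batch."""
    return [msg for msg in (notification_for(r) for r in records) if msg is not None]
```

Keeping message building separate from sending makes the logic easy to test locally with hand-written records before wiring it to a real notification service.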

Sajal Tyagi

Technical Consultant · DevOps Engineer · Quality Engineer · Software Engineer

Sajal Tyagi is a seasoned cloud and DevOps professional pursuing an MSc in Management at Trinity College Dublin. He holds Terraform, Vault, and AWS Certified Solutions Architect certifications, and is a Big Data and Oracle Autonomous Database Cloud specialist.
