Q.1. What do you know about DevOps?

A.1. DevOps is a collaborative work culture that aims to unify the efforts of the development and operations teams to accelerate the delivery of software products with the highest quality and minimal errors.

DevOps is about automating the entire software development life cycle (SDLC) with automation tools to achieve continuous development, continuous testing, continuous integration, continuous deployment, and continuous monitoring.

DevOps is a ‘re-org’ of existing resources in which continuous improvement is sought through learning from constant feedback.

DevOps fulfils the requirements for fast and reliable development and deployment of software. Companies like Amazon and Google have adopted DevOps and perform thousands of code deployments per day.

Q.2. Why has DevOps become so popular over the recent years?

A.2. Let us understand the impact of DevOps through some examples:

Netflix and Facebook are investing in DevOps to automate and accelerate application deployment, and this has helped them grow their business tremendously. Facebook’s continuous deployment and code ownership models have helped it scale up while ensuring a high-quality experience. Hundreds of lines of code are deployed without affecting the quality, stability, and security of the product.

Netflix, a streaming and on-demand video company, follows similar practices and has fully automated processes and systems.

Today, Facebook has 2 billion users, while Netflix streams online content to more than 100 million users worldwide. This is how DevOps has helped organizations achieve higher success rates for releases, reduce the lead time for bug fixes, streamline and continuously deliver through automation, and reduce their manpower costs.

Q.3. What is the difference between continuous deployment and continuous delivery?

A.3. Continuous deployment is fully automated: every change that passes the automated stages is deployed all the way to production with no manual intervention. In continuous delivery, changes are deployed automatically only up to a QA, UAT, or pre-prod environment, and deployment to production requires approval from a manager or higher authority. Which approach is chosen depends on your organization’s application risk factor and policy.
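The two approaches differ only in the gate in front of production. A minimal Python sketch, assuming a hypothetical `release` function and the environment names from the answer above (a real pipeline would invoke deployment tooling at each step):

```python
from typing import Callable, List

# Hypothetical environment order; real pipelines define their own stages.
ENVIRONMENTS = ["qa", "uat", "pre-prod", "production"]


def release(
    build: str,
    continuous_deployment: bool,
    approve: Callable[[str], bool] = lambda build: False,
) -> List[str]:
    """Hypothetical helper: promote a build through each environment."""
    deployed = []
    for env in ENVIRONMENTS:
        # Continuous delivery: production needs an explicit (manual) approval.
        if env == "production" and not continuous_deployment and not approve(build):
            break
        deployed.append(env)  # stand-in for the actual deployment step
    return deployed


print(release("build-42", continuous_deployment=True))   # fully automated
print(release("build-42", continuous_deployment=False))  # stops before production
```

Passing `approve=lambda build: True` models the manager’s sign-off, after which the continuous delivery path also reaches production.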

Q.4. What is a version control system?

A.4. A version control system (VCS) keeps a shared repository to which teammates commit changes, maintaining a complete history of their work. It gives developers the flexibility to work on a particular artifact simultaneously, and all modifications can be logically merged at a later stage.

Version control allows you to:

- Revert files back to a previous state.

- Revert the entire project back to a previous state.

- Compare changes over time.

- Track who made each modification, and when.

- Prevent developers from overwriting each other’s changes.

- Maintain the history of every version.
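The capabilities listed above can be illustrated with a toy in-memory store. This is a hypothetical Python sketch, not how SVN or Git store data: each commit snapshots all files, `difflib` compares revisions, and a commit based on an outdated revision is rejected so nobody silently overwrites a teammate’s work.

```python
import difflib
from typing import Dict, List


class ToyRepo:
    """Hypothetical in-memory version store: each commit snapshots every file."""

    def __init__(self) -> None:
        self.history: List[dict] = []  # index in this list = revision number

    def head(self) -> int:
        return len(self.history) - 1  # -1 means "empty repository"

    def commit(self, author: str, message: str, files: Dict[str, str], base: int) -> int:
        # Refuse commits based on an old revision, so nobody silently
        # overwrites a teammate's newer changes.
        if base != self.head():
            raise ValueError(f"stale base r{base}; update to r{self.head()} and merge first")
        self.history.append({"author": author, "message": message, "files": dict(files)})
        return self.head()

    def checkout(self, rev: int) -> Dict[str, str]:
        """Return the whole project as it was at `rev` (project-level revert)."""
        return dict(self.history[rev]["files"])

    def file_at(self, path: str, rev: int) -> str:
        """Return one file as it was at `rev` (file-level revert)."""
        return self.history[rev]["files"][path]

    def diff(self, path: str, old: int, new: int) -> List[str]:
        """Compare one file between two revisions as a unified diff."""
        a = self.history[old]["files"].get(path, "").splitlines()
        b = self.history[new]["files"].get(path, "").splitlines()
        return list(difflib.unified_diff(a, b, lineterm=""))

    def log(self) -> List[str]:
        """Who changed what, revision by revision."""
        return [f"r{i} {c['author']}: {c['message']}" for i, c in enumerate(self.history)]
```

For example, after alice commits `app.py` with `x = 1` (base `-1`) and bob commits `x = 2` on top of revision 0, `diff("app.py", 0, 1)` contains `-x = 1` and `+x = 2`, while a third commit that still claims base 0 raises `ValueError`.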

There are two types of Version Control Systems:

- Centralized Version Control System, e.g., SVN

- Distributed/Decentralized Version Control System, e.g., Git, Mercurial (hosting services such as GitHub and Bitbucket are built on top of these)

Q.5. What is Git and explain the difference between Git and SVN?

A.5. Git is a Distributed Version Control System (DVCS) that manages small as well as large projects efficiently. Git repositories are commonly hosted on a remote server such as GitHub, but every clone is a complete repository in its own right.

Its distributed architecture provides many advantages over other Version Control Systems (VCS) like SVN.

| Git | SVN |
|---|---|
| Git is a decentralized version control tool | SVN is a centralized version control tool |
| Every developer’s machine holds a local repository with the full history of the whole project, so if the server has an outage, you can easily recover the code from a local Git repository | SVN relies only on the central server to store all versions of the project files |
| Push and pull operations are fast | SVN has no push/pull; commits and updates go directly to the central server and are slower compared to Git |
| Clients clone the entire repository, including its history, onto their local systems | Version history is stored only in the server-side repository |
| Commits can be made offline | Commits can be made only while connected to the server |
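The first rows of the table (full local history, offline commits, recovery from any clone) follow from one idea: a clone copies everything. A hypothetical Python sketch of that model, simplified to a linear list of commit messages (real Git stores a graph of commit objects):

```python
from typing import List, Optional


class Repo:
    """Hypothetical toy DVCS repository; a list of messages stands in for history."""

    def __init__(self, commits: Optional[List[str]] = None) -> None:
        self.commits: List[str] = list(commits or [])

    def clone(self) -> "Repo":
        # Like `git clone`, copy the *entire* history, not just the latest files.
        return Repo(self.commits)

    def commit(self, message: str) -> None:
        # Purely local: works offline, no server round trip.
        self.commits.append(message)

    def push(self, remote: "Repo") -> None:
        # Fast-forward only: the remote's history must be a prefix of ours.
        if self.commits[: len(remote.commits)] != remote.commits:
            raise ValueError("non-fast-forward push; pull and merge first")
        remote.commits = list(self.commits)


server = Repo(["initial import"])
laptop = server.clone()
laptop.commit("fix typo (made offline)")  # server is untouched until push
laptop.push(server)
restored = laptop.clone()  # after a server outage, any clone can seed a new server
```

In a centralized tool like SVN, by contrast, the `commit` step itself would need the server.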

Q.6. What is CI? What is its purpose?

A.6. Continuous Integration (CI) is a development practice that requires developers to integrate code into a shared repository each time they make a commit; every integration is then verified by an automated build and tests.

The CI process:

- Developers check out code into their private workspaces.

- When they are done, they commit the changes to the shared repository (the version control repository).

- The CI server monitors the repository and detects changes when they occur.

- The CI server then pulls these changes, builds the system, and runs unit and integration tests.

- If the build succeeds, the CI server informs the team.

- If the build or tests fail, the CI server alerts the team.

- The team fixes the issue at the earliest opportunity.

- This process repeats with every change.

Hence, Continuous Integration as a practice pushes developers to integrate their changes frequently so that they get early feedback.
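The CI loop described above (poll, build, test, notify) can be sketched in a few lines. A minimal Python illustration, assuming hypothetical `poll` and `ci_cycle` functions; the `build`, `run_tests`, and `notify` callables are stand-ins for real steps such as a Maven build, a JUnit run, or an email alert:

```python
from typing import Callable, Dict, List


def poll(repo_log: List[str], last_seen: int) -> List[str]:
    """Hypothetical: return commits that arrived since the previous poll."""
    return repo_log[last_seen:]


def ci_cycle(
    commits: List[str],
    build: Callable[[str], bool],
    run_tests: Callable[[str], bool],
    notify: Callable[[str], None],
) -> Dict[str, bool]:
    """Hypothetical: build and test each new commit, then tell the team."""
    results: Dict[str, bool] = {}
    for sha in commits:
        ok = build(sha) and run_tests(sha)  # tests run only if the build succeeds
        notify(f"{sha}: {'SUCCESS' if ok else 'FAILURE'}")
        results[sha] = ok
    return results


repo_log = ["a1f3", "b7c2"]
messages: List[str] = []
results = ci_cycle(
    poll(repo_log, last_seen=0),
    build=lambda sha: True,
    run_tests=lambda sha: sha != "b7c2",  # pretend b7c2 breaks a unit test
    notify=messages.append,
)
```

Because every commit gets its own verdict, the failing change (`b7c2` here) is pinpointed immediately, which is the early feedback CI is meant to provide.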

Q.7. What is Jenkins? What are the advantages of Jenkins?

A.7. Jenkins is an open-source Continuous Integration tool written in Java.

It keeps track of the version control system and initiates and monitors a build whenever changes occur. It monitors the whole CI/CD process and provides reports and notifications to alert the concerned team.

Advantages of using Jenkins:

- Bugs are easy to track at an early stage in the development environment.

- Provides support for many plugins to interface with various automation tools.

- Build failures are caught at the integration stage.

- For each code commit, a build report notification is generated automatically.

- It can be integrated with a mail server to notify developers about build success or failure.

- Supports continuous integration, agile development, and test-driven development.

- Maven release projects can also be automated in a few simple steps.

Q.8. Why are configuration management processes and tools important?

A.8. Each software or testware being developed in a CI/CD pipeline has multiple builds, releases, revisions, and versions. This data needs to be stored and maintained, development builds need to be tracked, and troubleshooting needs to be simplified. CM tools let you achieve these objectives.

The purpose of Configuration Management (CM) is to ensure the integrity of a product or system throughout its life-cycle by making the development or deployment process controllable and repeatable, thereby creating a higher quality product or system with consistent performance across environments.

Q.9. What is IaC? How would you achieve it?

A.9. IaC (Infrastructure as Code) is programmable infrastructure, where infrastructure is treated in the same way as any other code. The traditional approach to managing infrastructure is rarely used in a DevOps scenario, because manual configurations, obsolete tools, and manual scripts are less reliable and more error-prone.

IaC can be achieved by using configuration management tools such as Chef, Puppet, and Ansible. These tools let you make infrastructure changes more easily, rapidly, safely, and reliably.

As a result, unit and integration testing of infrastructure configurations, and maintaining up-to-date infrastructure documentation, become quite manageable and accurate with IaC.

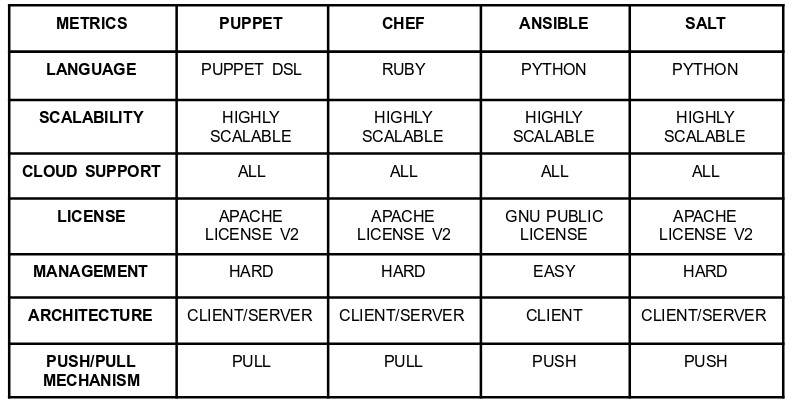
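IaC tools such as Chef, Puppet, and Ansible let you declare a desired state and converge the live system toward it, so re-running the same code is safe. The Python sketch below is a simplified, hypothetical model of that idea (it is not any particular tool’s algorithm): `plan` computes the difference between desired and actual state, and `apply` changes only what differs.

```python
from typing import Dict, List, Tuple

Change = Tuple[str, str, str]  # (action, resource, value)


def plan(desired: Dict[str, str], actual: Dict[str, str]) -> List[Change]:
    """Hypothetical: diff the declared configuration against the live system."""
    changes: List[Change] = []
    for name, value in sorted(desired.items()):
        if name not in actual:
            changes.append(("create", name, value))
        elif actual[name] != value:
            changes.append(("update", name, value))
    return changes  # resources already in the desired state are left alone


def apply(desired: Dict[str, str], actual: Dict[str, str]) -> List[Change]:
    """Converge `actual` toward `desired`; a second run is a no-op (idempotence)."""
    changes = plan(desired, actual)
    for _action, name, value in changes:
        actual[name] = value  # stand-in for installing a package, editing a file, ...
    return changes


# Hypothetical resources: package versions and service settings on one server.
desired = {"nginx": "1.26", "ntp": "enabled", "firewall": "enabled"}
server = {"nginx": "1.24", "ntp": "disabled"}
first_run = apply(desired, server)
second_run = apply(desired, server)
```

The first run creates the missing firewall setting and updates the other two; the second run finds nothing to change. That repeatability is what makes infrastructure code testable like any other code.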

Q.10. Which among Puppet, Chef, SaltStack, and Ansible is the best Configuration Management (CM) tool? Why?

A.10. Puppet is the oldest and most mature Ruby-based Configuration Management tool. Only some of its features are free, while the prominent features are available only in the paid version. Organizations needing more customization will probably need to upgrade to the paid version to use Puppet for CM.

Chef is also written in Ruby and includes free features, plus it can be upgraded from open source to enterprise level if necessary. On top of that, it’s a very flexible product.

Ansible is a very secure option since it uses Secure Shell (SSH). It’s a simple tool to use, and it offers a number of other services in addition to configuration management. It’s very easy to learn, so it’s well suited to those who don’t have dedicated IT staff but still need a configuration management tool. Ansible is agentless and does not need any additional agent software installed on remote machines (beyond SSH access and Python), which makes it the most favoured choice.

SaltStack is a Python-based open-source CM tool made for larger businesses, but its learning curve is fairly low.

Q.11. What is Ansible?

A.11. Ansible is a software configuration management tool that deploys applications over SSH and can do so without downtime (for example, with rolling updates). Ansible is developed in Python and is mainly used in IT infrastructure to manage remote nodes or deploy applications to them.

If we want to deploy an application to hundreds of nodes by executing a single command, Ansible is an agentless tool that provides this ability seamlessly.

Q.12. What are playbooks in Ansible?

A.12. Playbooks are Ansible’s configuration, deployment, and orchestration language. They can describe a policy you want your remote systems to enforce, or a set of steps in a general IT process. Playbooks are written in YAML, a human-readable, easy-to-understand text format.

Q.13. What is a Docker container? How do you create, start and stop containers?

A.13. Docker is a containerization technology that packages your application and all its dependencies together in the form of Containers to ensure that your application works seamlessly in any environment.

A container consists of an entire runtime environment: an application, plus all its dependencies, libraries and other binaries, and configuration files needed to run it, bundled into one package.

Containerizing the application platform and its dependencies eliminates any infrastructure differences and maintains consistency across environments.

Containers share the host operating system’s kernel with other containers, running as isolated processes in user space.

Docker containers can be created either by building your own Docker image and running it, or by running images that are available on Docker Hub.

We can use a Docker image to create a Docker container with the command below:

`docker run -t -i <image name> <command name>`

This command creates and starts the container.

Docker containers can run in two modes:

Attached: The container runs in the foreground of your terminal. When it is started with the -i and -t options, you get an interactive terminal inside the container, and its logs are written to stdout on your screen.

Detached: This mode, started with the -d option, is usually used in production. The container runs as a background process, and its output is captured in logs that can be viewed with the `docker logs` command.

To list all containers on a host along with their status (`docker ps` alone shows only running containers; `-a` also includes stopped ones):

`docker ps -a`

To stop a Docker container:

`docker stop <container id>`

To restart a Docker container:

`docker restart <container id>`

Q.14. What is a Dockerfile used for?

A.14. A Dockerfile is a text document that contains all the commands a user could call on the command line to assemble an image.

Using `docker build`, users can create an automated build that executes several command-line instructions in succession.

Q.15. What is a Docker image?

A.15. A Docker image is the source of a Docker container: images are used to create containers. Images are created with the `build` command, and they produce a container when started with `run`. Images are stored in a Docker registry such as registry.hub.docker.com.

Docker containers are basically runtime instances of Docker images.

Q.16. What is Docker Hub?

A.16. Docker Hub is a cloud-based registry service which allows you to use pre-defined images, build your images and test them, store manually pushed images, and link to Docker Cloud so that you can deploy images on your hosts.

It provides a centralized resource for container image discovery, distribution and change management, user and team collaboration, and workflow automation throughout the development pipeline.

Q.17. What are the advantages that Containerization provides over virtualization?

A.17. These are the advantages of containerization over virtualization:

- Containers can be provisioned and scaled almost in real time, whereas VMs are slow to provision

- Containers have a minimal OS and share the kernel of the underlying host OS, whereas each VM runs a full-fledged OS

- Containers are lightweight when compared to VMs

- VMs have limited performance when compared to containers

- Containers have better resource utilization compared to VMs

Q.18. What is Nagios?

A.18. Nagios is used for continuous monitoring of systems, applications, services, and business processes in a DevOps culture.

If a failure occurs, Nagios can alert the technical staff about the problem, allowing them to begin remediation before outages affect business processes, end users, or customers. With Nagios, you don’t have to explain why an unseen infrastructure outage affected your application’s performance in the production environment.

By using Nagios you can:

- Plan for infrastructure upgrades proactively, before outdated systems cause failures.

- Respond to issues at the first sign of a problem.

- Automatically fix problems when they are detected.

- Coordinate technical team responses.

- Ensure your organization’s SLAs are being met.

- Monitor your entire infrastructure and business processes.
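Nagios learns about problems from plugins: small programs that print one status line and exit with 0 (OK), 1 (WARNING), 2 (CRITICAL), or 3 (UNKNOWN). A minimal Python sketch of such a check for disk usage; `check_disk_usage` and the 80%/90% thresholds are hypothetical choices, and this is not the `check_disk` plugin shipped with the standard Nagios plugins.

```python
import shutil
from typing import Tuple

# Nagios plugin convention: the exit code carries the service state.
OK, WARNING, CRITICAL, UNKNOWN = 0, 1, 2, 3
LABELS = {OK: "OK", WARNING: "WARNING", CRITICAL: "CRITICAL", UNKNOWN: "UNKNOWN"}


def evaluate(used_pct: float, warn: float, crit: float) -> int:
    """Map a measurement onto a Nagios state using warning/critical thresholds."""
    if used_pct >= crit:
        return CRITICAL
    if used_pct >= warn:
        return WARNING
    return OK


def check_disk_usage(path: str = "/", warn: float = 80.0, crit: float = 90.0) -> Tuple[int, str]:
    """Hypothetical check: return (exit code, one-line status with perf data)."""
    try:
        usage = shutil.disk_usage(path)
    except OSError as exc:
        return UNKNOWN, f"DISK UNKNOWN - cannot read {path}: {exc}"
    used_pct = 100.0 * usage.used / usage.total
    state = evaluate(used_pct, warn, crit)
    # Text before "|" is shown to operators; after "|" is performance data.
    status = f"DISK {LABELS[state]} - {path} {used_pct:.1f}% used"
    return state, f"{status} | used={used_pct:.1f}%;{warn};{crit}"
```

As a standalone plugin script, it would print the text and exit with the code (for example `code, text = check_disk_usage(); print(text); sys.exit(code)` after `import sys`). NRPE, described next, is what lets Nagios run such a script on a remote host.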

Q.19. What is NRPE (Nagios Remote Plugin Executor) in Nagios?

A.19. The NRPE addon is designed to let you execute Nagios plugins on remote Linux/Unix machines. The main reason for doing this is to allow Nagios to monitor “local” resources (like CPU load and memory usage) on remote machines. Since these local resources are not usually exposed to external machines, an agent like NRPE must be installed on the remote Linux/Unix machines.

Q.20. How do all the automation tools work together in a CI/CD flow?

A.20. In a CI/CD pipeline, everything is automated for seamless delivery. However, the flow may vary from organization to organization according to their requirements.

A generic DevOps flow would be:

- Developers write the code and commit their changes to the Git repository.

- Jenkins pulls this code from the repository using the Git plugin and builds it using tools like Ant or Maven.

- Unit testing is performed using a framework such as JUnit (a JUnit plugin is available in Jenkins).

- Once the application is packaged into an executable file, it is pushed to an artifact repository such as JFrog Artifactory; then a Docker image comprising the application (pulled from the artifact repository) and all its dependencies is created.

- Configuration management tools like Ansible deploy this image across environments with environment-specific configuration settings.

- Once it reaches the testing environment, Selenium is used to run the automated test cases.

- Then the image is deployed to the pre-prod or staging environment by Ansible, and integration testing is done.

- Once the code is thoroughly tested in each of these environments, Jenkins sends it for deployment to the production server (the production server itself is provisioned and maintained by tools like Ansible).

- After deployment, continuous monitoring is done by tools like Nagios, and logs are collected and interpreted by tools like Splunk (a log aggregator).
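The key property of the flow above is that stages run in order and a failure stops the build from moving closer to production. A hypothetical Python sketch of that fail-fast orchestration; the stage names mirror the generic flow, and each lambda is a placeholder for the real tool invocation, not an actual Jenkins, Maven, Docker, or Ansible API:

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[str]:
    """Hypothetical orchestrator: run stages in order, stop at the first failure."""
    log: List[str] = []
    for name, step in stages:
        ok = step()
        log.append(f"{name}: {'passed' if ok else 'FAILED'}")
        if not ok:
            break  # later stages (e.g. production) never run on a bad build
    return log


# Placeholder steps: each lambda stands in for a real tool call
# (Maven build, JUnit run, docker build, Ansible deploy, ...).
GENERIC_FLOW: List[Stage] = [
    ("checkout from Git", lambda: True),
    ("build with Maven", lambda: True),
    ("unit tests (JUnit)", lambda: True),
    ("push artifact to artifact repository", lambda: True),
    ("build Docker image", lambda: True),
    ("deploy to test with Ansible", lambda: True),
    ("Selenium tests", lambda: True),
    ("deploy to staging + integration tests", lambda: True),
    ("deploy to production", lambda: True),
]
```

If the Selenium stage returned `False`, the log would end at `"Selenium tests: FAILED"` and the production stage would never run.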
